What is Data Integrity?

Data integrity is the assurance that data meets quality standards at all times. Data consumers work with data on the assumption that it is reliable, and it is easy to imagine consumers who go further and treat the data they use as immutable and truthful. Their trust runs deep enough that they make life decisions based on it. That is why it is important to ensure that data is reliable.

Understanding Data Integrity

Data integrity rests on several properties of the data itself. Accurate data represents information faithfully, reflecting the true values and context of the source it was derived from without errors or misrepresentation. Complete data includes all required elements and remains in its full form, neither filtered nor truncated, so that no essential pieces are missing that could compromise analysis or insights. Consistent data maintains uniformity across systems, formats, and stages of processing, meaning that whether it is accessed from a reporting tool, a data warehouse, or an application, it appears the same and behaves predictably.

While data can be transformed to enhance its quality or usability, such transformations should follow clearly defined rules to ensure transparency and repeatability. Valid data has passed checks confirming that it reflects real-world conditions: for example, that phone numbers are active and reach real people, or that email addresses are authentic, deliverable, and not fraudulent.
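Confirming full validity, such as an active phone line or a deliverable mailbox, requires external verification services. A rule-based format check at the point of entry is a cheap first line of defense. The sketch below is a minimal illustration under two assumptions: the email pattern is deliberately simplified, and the rule that phone numbers carry 10 to 15 digits is hypothetical. It rejects malformed values but cannot prove that an address or number is real.

```python
import re

# Deliberately simplified pattern: it rejects obviously malformed addresses
# but cannot confirm that a mailbox exists or accepts mail.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[A-Za-z]{2,}$")


def is_plausible_email(value: str) -> bool:
    """Return True if the value looks like a syntactically plausible email."""
    return bool(EMAIL_PATTERN.match(value.strip()))


def is_plausible_phone(value: str, min_digits: int = 10, max_digits: int = 15) -> bool:
    """Return True if the value has an allowed number of digits (hypothetical rule)."""
    digits = re.sub(r"\D", "", value)  # drop spaces, dashes, parentheses, and '+'
    return min_digits <= len(digits) <= max_digits


def validate_contact(record: dict) -> list:
    """Collect human-readable validation errors for one contact record."""
    errors = []
    if not is_plausible_email(record.get("email", "")):
        errors.append("invalid email format")
    if not is_plausible_phone(record.get("phone", "")):
        errors.append("invalid phone format")
    return errors


print(validate_contact({"email": "ana@example.com", "phone": "+1 (555) 010-2345"}))  # []
print(validate_contact({"email": "ana@example", "phone": "12345"}))
# ['invalid email format', 'invalid phone format']
```

Records that fail these checks would typically be rejected or quarantined for review rather than silently corrected.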

Ultimately, data integrity is the condition of data being whole, accurate, consistent, and trustworthy, protected from unauthorized modifications, corruption, or loss throughout its lifecycle. This includes not only the technical enforcement of data quality but also the governance and monitoring frameworks that ensure data remains reliable for both operational and analytical use.

Data Integrity vs. Data Quality

Data integrity and data quality are often used interchangeably, but they refer to distinct concepts within the broader discipline of data management. While both are essential for ensuring that data is trustworthy and useful, they focus on different aspects of data reliability.

Data integrity refers to the completeness, consistency, and accuracy of data throughout its entire lifecycle. It focuses on maintaining the structure and reliability of data as it is created, stored, processed, and accessed. Data integrity ensures that data is not altered inappropriately, lost, or corrupted, whether through human error, technical failure, or malicious activity. It is enforced through controls such as access management, validation rules, audit trails, error detection mechanisms, backups, and encryption. It also includes technical practices like referential integrity in relational databases and transaction controls to ensure that data changes are tracked and reversible if needed.
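In a relational database, referential integrity and transaction controls are usually declared in the schema and enforced by the engine. The sketch below uses Python's built-in `sqlite3` module with hypothetical `customers` and `orders` tables. A foreign key rejects an order that points to a missing customer, and a transaction rolls back a multi-step change when any step fails. Note that SQLite enforces foreign keys only when `PRAGMA foreign_keys` is switched on for the connection.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when this is on
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
    CREATE TABLE orders (
        id          INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(id),
        amount      REAL NOT NULL
    );
""")
conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ana')")
conn.commit()

# Referential integrity: an order pointing at a nonexistent customer is rejected.
try:
    conn.execute("INSERT INTO orders (customer_id, amount) VALUES (99, 10.0)")
except sqlite3.IntegrityError as exc:
    conn.rollback()
    print("rejected:", exc)

# Transaction control: the two inserts are applied together or not at all.
try:
    with conn:  # commits if the block succeeds, rolls back if an exception escapes
        conn.execute("INSERT INTO orders (customer_id, amount) VALUES (1, 25.0)")
        conn.execute("INSERT INTO orders (customer_id, amount) VALUES (42, 5.0)")  # bad reference
except sqlite3.IntegrityError:
    print("transaction rolled back")

print(conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0])  # 0: the valid insert was undone too
```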

Data quality refers to how useful and fit for purpose the data is within a given context. It is primarily concerned with the condition of the data rather than its lifecycle management. High-quality data is not only accurate and consistent, but also timely, relevant, and complete based on the business or analytical use case. Improving data quality often involves data profiling, cleansing, standardization, enrichment, and ongoing monitoring. It’s about making sure the data not only exists but also delivers value by supporting operational efficiency, analytics, and decision-making.

Data Integrity vs. Data Security

Data integrity and data security are two foundational concepts in data management, and while they are closely related, they serve distinct purposes and address different aspects of protecting and managing data.

Data integrity refers to the accuracy, consistency, and completeness of data throughout its lifecycle. It ensures that the data stored, processed, or transmitted remains unchanged from its original state unless properly authorized and documented. This means that data must be free from unauthorized modifications, corruption, duplication, or loss. It focuses on making sure that data truly represents what it is supposed to, and that any transformations or updates to the data are legitimate, traceable, and reversible if needed. High data integrity allows organizations to make decisions based on reliable and unaltered information.
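One way to make changes traceable and reversible is an append-only audit log that records who changed what, when, and the prior value. The sketch below is a hypothetical in-memory illustration; the `AuditedStore` class does not come from any particular product. A reversal is itself recorded as a new log entry, so the history is never rewritten. A real system would persist the log and protect it from tampering.

```python
from datetime import datetime, timezone

_MISSING = object()  # sentinel meaning "the key did not exist"


class AuditedStore:
    """Hypothetical key-value store that logs every change so it can be traced and reversed."""

    def __init__(self):
        self.data = {}
        self.log = []  # append-only: entries are never edited or deleted

    def _apply(self, key, value, user, action):
        # Record who changed what, when, and the previous value, then apply the change.
        self.log.append({
            "action": action,
            "key": key,
            "old": self.data.get(key, _MISSING),
            "new": value,
            "user": user,
            "at": datetime.now(timezone.utc).isoformat(),
        })
        if value is _MISSING:
            self.data.pop(key, None)
        else:
            self.data[key] = value

    def update(self, key, value, user):
        self._apply(key, value, user, "update")

    def revert(self, index, user):
        """Undo log entry `index` by restoring its old value as a new, logged change."""
        entry = self.log[index]
        self._apply(entry["key"], entry["old"], user, f"revert #{index}")


store = AuditedStore()
store.update("price:sku-1", 19.99, user="ana")
store.update("price:sku-1", 1.99, user="bob")  # a suspicious change
store.revert(1, user="ana")
print(store.data)      # {'price:sku-1': 19.99}
print(len(store.log))  # 3: the original change and its reversal both stay on record
```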

Data security, on the other hand, is focused on protecting data from unauthorized access, breaches, theft, or malicious attacks. It encompasses the tools, technologies, policies, and practices that safeguard sensitive data from both internal and external threats. This includes encryption, authentication, access control, firewalls, intrusion detection systems, and data masking. Data security ensures that only authorized users and systems can access or manipulate data, and it defends against cyber threats such as hacking, malware, ransomware, and phishing attacks. While data security does not inherently ensure that data remains correct or consistent, it plays a critical role in preventing unauthorized actions that could compromise data integrity.

The key difference lies in what each concept protects. Data integrity ensures the correctness and reliability of data, whereas data security protects the confidentiality, availability, and access control surrounding that data. In practice, these two areas must work together. For example, if a system is not secure, a breach could compromise data integrity by altering or deleting records. Conversely, if data is corrupted due to software errors or hardware failure, integrity is lost even if the data was never accessed by an unauthorized user.

Why is Data Integrity Important?

Today, the data landscape is vast and rapidly expanding. Data flows through every part of our personal and professional lives, from the apps we use to the purchases we make and the devices we wear. Businesses generate even more through internal systems, customer interactions, and third-party sources. In this environment, organizations that recognize the strategic value of data and can turn raw data into actionable business insights have a significant competitive advantage. However, the sheer volume, velocity, and variety of data being produced also introduce a great deal of noise: redundant, inconsistent, incomplete, or irrelevant information can obscure the true signals.

This is where data integrity becomes essential. To turn data into a reliable resource for analytics, it must first be refined: filtered to remove inaccuracies, standardized for consistency, and enriched for contextual understanding. Once cleaned, combined across sources, and transformed into usable formats, high-integrity data allows organizations to extract meaningful insights, drive informed decisions, and fuel intelligent systems such as AI and machine learning.
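The filter, standardize, and combine steps can be sketched as a small pipeline. This minimal illustration rests on several assumptions: the records are hypothetical with `name` and `email` fields, the standardization rules are illustrative (trim whitespace, lowercase emails, title-case names), and email serves as the deduplication key. Real pipelines choose keys and rules per data set.

```python
def standardize(record: dict) -> dict:
    """Apply fixed, repeatable rules: collapse whitespace, title-case names, lowercase emails."""
    return {
        "name": " ".join(record["name"].split()).title(),
        "email": record["email"].strip().lower(),
    }


def clean_and_merge(*sources: list) -> list:
    """Standardize records from several sources and drop duplicates by email."""
    seen = set()
    merged = []
    for source in sources:
        for raw in source:
            rec = standardize(raw)
            if not rec["email"]:
                continue  # filter out records missing a required field
            if rec["email"] in seen:
                continue  # duplicate of a record already kept
            seen.add(rec["email"])
            merged.append(rec)
    return merged


crm = [{"name": "  ana   LOPEZ ", "email": "Ana@Example.com "}]
shop = [{"name": "Ana Lopez", "email": "ana@example.com"}, {"name": "Bo", "email": ""}]
print(clean_and_merge(crm, shop))
# [{'name': 'Ana Lopez', 'email': 'ana@example.com'}]
```

Keeping the first occurrence is the simplest survivorship rule. Production tools often prefer the most recent or most complete record instead, but either way the rule should be explicit and repeatable.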

But ensuring data integrity doesn’t end after initial processing. It must be actively maintained through validation rules, monitoring, governance frameworks, and security protocols that preserve its accuracy, completeness, and reliability over time. Without this ongoing care, data can quickly become stale, corrupted, or misleading, eroding trust, weakening outcomes, and potentially leading to costly errors. In short, maintaining data integrity is what enables data to remain a durable, high-value asset capable of delivering long-term business impact.
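Ongoing monitoring can start with simple scheduled checks. The sketch below makes two assumptions: the records are hypothetical and carry an `updated_at` timestamp, and the thresholds (95% completeness and a maximum age) are illustrative values that a governance framework would normally set. It reports when required fields go missing or when the data stops being refreshed.

```python
from datetime import datetime, timedelta, timezone


def check_health(records, required_fields, max_age, now=None, min_completeness=0.95):
    """Return alerts when completeness or freshness falls below the given thresholds."""
    now = now or datetime.now(timezone.utc)
    alerts = []
    for field_name in required_fields:
        filled = sum(1 for r in records if r.get(field_name) not in (None, ""))
        ratio = filled / len(records) if records else 0.0
        if ratio < min_completeness:
            alerts.append(f"{field_name}: only {ratio:.0%} populated")
    newest = max((r["updated_at"] for r in records), default=None)
    if newest is None or now - newest > max_age:
        alerts.append("data is stale")
    return alerts


now = datetime(2024, 1, 10, tzinfo=timezone.utc)
rows = [
    {"email": "a@example.com", "updated_at": datetime(2024, 1, 1, tzinfo=timezone.utc)},
    {"email": "", "updated_at": datetime(2024, 1, 2, tzinfo=timezone.utc)},
]
print(check_health(rows, ["email"], max_age=timedelta(days=3), now=now))
# ['email: only 50% populated', 'data is stale']
```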

What Are the Types of Data Integrity?

Safeguarding data integrity comprehensively requires a holistic view of how an organization keeps its data. Managing data sets and data access matters, but keeping data faithful, accurate, complete, consistent, and secure also depends on where and how it is physically stored. Broadly, this means considering two types of data integrity: physical integrity and logical integrity.

Physical Integrity

Maintaining data integrity begins with protecting and securing the physical infrastructure where data lives. This generally means protecting memory and storage hardware, but as more data services run in the cloud, this becomes less of an in-house responsibility. In many cases, cloud providers offer better data services than small IT teams can build, and companies can save considerable cost and time by shopping judiciously for cloud data services. In other cases, cloud providers can augment the data strategy of enterprises that need to diversify their global data infrastructure.

In either case, the providers assume responsibility for ensuring data integrity by protecting physical infrastructure from multiple threats:

- Infrastructure and hardware faults and failures

- Design failures

- Environmental impacts that cause equipment deterioration

- Power outages and disruptions

- Natural disasters

- Environmental extremes

Some key techniques that cloud providers and enterprises may design into their systems to ensure infrastructure protection and thereby data integrity include:

- RAID or other redundant storage systems with battery protection

- Error-correcting code (ECC) memory

- Cluster and distributed file systems

- Error detection algorithms to protect data in transit

- Backup generators
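Error detection in transit usually works by sending a checksum with the data and recomputing it on arrival. The sketch below uses Python's standard `zlib.crc32`, which is fast and aimed at accidental corruption, and `hashlib.sha256`. The payload is illustrative. A plain checksum catches accidental corruption but not deliberate tampering, because an attacker can simply recompute it. Guarding against tampering requires a keyed construction such as an HMAC or a digital signature.

```python
import hashlib
import zlib


def fingerprint(payload: bytes) -> dict:
    """Compute a CRC-32 and a SHA-256 digest for a payload."""
    return {"crc32": zlib.crc32(payload), "sha256": hashlib.sha256(payload).hexdigest()}


def verify(payload: bytes, expected: dict) -> bool:
    """Recompute the checksums on receipt and compare them with the sender's values."""
    return fingerprint(payload) == expected


sent = b"order_id=1001,amount=25.00"
checksums = fingerprint(sent)  # transmitted alongside the payload

received_ok = sent
received_bad = b"order_id=1001,amount=26.00"  # one corrupted character

print(verify(received_ok, checksums))   # True
print(verify(received_bad, checksums))  # False
```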

Redundancy is clearly a key principle for the physical protection of data. Assuming the physical layer is sound, we can turn to the logical aspect of data integrity.
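Parity is one of the simplest forms of redundancy. The sketch below shows, in heavily simplified form, the XOR parity idea used by RAID levels such as RAID 5. Real arrays rotate parity across disks and work on large stripes, and the block contents here are illustrative. Because XOR is its own inverse, any single lost block can be rebuilt from the surviving blocks plus the parity block.

```python
from functools import reduce


def xor_blocks(*blocks: bytes) -> bytes:
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# Three data blocks striped across three disks, plus a parity block on a fourth.
disks = [b"ACCT", b"1001", b"USD!"]
parity = xor_blocks(*disks)

# Simulate losing disk 1: XOR of the surviving blocks and the parity restores it.
lost_index = 1
survivors = [blk for i, blk in enumerate(disks) if i != lost_index]
rebuilt = xor_blocks(*survivors, parity)
print(rebuilt)  # b'1001'
```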

Logical Integrity

Logical data integrity is concerned with the data itself: whether it makes sense given business context and trends, and whether it must be adjusted because underlying assumptions have changed. In essence, it is concerned with the fitness of the data for real-world needs. At a technical level, logical integrity rests on four main principles.

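- Entity integrity — ensuring that every record is uniquely identifiable, typically through a primary key that is never duplicated or null, so that there are no duplicate records and no missing critical fields.

- Referential integrity — ensuring that relationships between tables remain valid: every foreign key must refer to an existing record, and updates or deletions must not leave orphaned references.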

- Domain integrity — ensuring that formats, types, value ranges and other attributes fall within acceptable parameters.

- User-defined integrity — additional rules, such as business rules, that help to further constrain the data to the needs of the organization.
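In a relational database, several of these principles map directly onto schema constraints. The sketch below uses Python's `sqlite3` module with a hypothetical `employees` table:

- a primary key and a `UNIQUE` column enforce entity integrity;
- a `CHECK` on age enforces domain integrity;
- a table-level `CHECK` stands in for a business rule.

The 16 to 100 age range and the 50% bonus cap are illustrative assumptions. Referential integrity would be declared in the same way, with a foreign key clause.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE employees (
        employee_id INTEGER PRIMARY KEY,                    -- entity: unique row identity
        email       TEXT NOT NULL UNIQUE,                   -- entity: no duplicates or blanks
        age         INTEGER CHECK (age BETWEEN 16 AND 100), -- domain: allowed value range
        salary      REAL NOT NULL,
        bonus       REAL NOT NULL DEFAULT 0,
        CHECK (bonus <= salary * 0.5)                       -- user-defined business rule
    )
""")

attempts = [
    (1, "ana@example.com", 34, 50000, 5000),  # valid
    (2, "ana@example.com", 29, 48000, 0),     # duplicate email
    (3, "bo@example.com", 12, 30000, 0),      # age outside the domain
    (4, "cy@example.com", 41, 60000, 40000),  # breaks the bonus rule
]
for row in attempts:
    try:
        with conn:  # each insert is its own transaction
            conn.execute("INSERT INTO employees VALUES (?, ?, ?, ?, ?)", row)
        print(row[0], "accepted")
    except sqlite3.IntegrityError as exc:
        print(row[0], "rejected:", exc)  # message wording varies by SQLite version
```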

How is Data Integrity Ensured?

Ensuring data integrity requires a framework that accounts not only for infrastructure protection and logical data rules but also for the human side of the organization. The following broad guidelines can help organizations maintain strong data integrity.

- Employee Training — Employees must understand their data responsibilities. Companies that implement data governance well typically establish a data governance steering committee, a data governance council, and data stewards responsible for specific data and processes.

- Establish a Data Integrity First Culture — With employee training in place, the company is on its way to a Data Integrity First Culture: a data-first culture that values data as an asset. With the right buy-in, data is treated as the asset it can be, employees look for ways to use it, and the company can keep improving its understanding of its data. Without buy-in, data efforts will flounder.

- Validate Data — Data validation is non-negotiable. All data should be validated for acceptability before it enters the system. Integration scenarios are increasing as more businesses buy data from third parties, such as performance marketers who may be paid per email address they deliver. Validating those addresses avoids paying for fake ones and helps build a reliable email database.

- Reasonably Cleansed Data — Preprocessing removes duplicate entries and other errors. Any cleansing and standardization, however, should produce a data set that is demonstrably more reliable and useful than it was before.

- Back Up and Protect Data — Periodic backups, especially in multiple locations, are a standard practice for protecting data integrity. Top-tier cloud services provide automatic backup and data protection.

- Implement Security — Cloud providers implement the industry-standard security features that enterprises should use to secure their data, including encryption, authentication and authorization, and access logs.
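Backups are only useful if they can be restored and verified. As a small-scale illustration, Python's `sqlite3` module provides `Connection.backup` for copying a live database. The sketch below copies a hypothetical `ledger` table into a second database, runs SQLite's `PRAGMA integrity_check` on the copy, and compares a row count and total against the source. In production, the target would be a file or object store in another location, and managed cloud backups would typically handle the copying.

```python
import sqlite3

source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE ledger (id INTEGER PRIMARY KEY, amount REAL NOT NULL)")
source.executemany("INSERT INTO ledger (amount) VALUES (?)", [(10.0,), (-2.5,), (7.25,)])
source.commit()

# Copy the live database into a separate backup database (a file path in practice).
replica = sqlite3.connect(":memory:")
source.backup(replica)


def table_digest(conn):
    """Summarize table contents so the copy can be checked against the original."""
    return conn.execute("SELECT COUNT(*), TOTAL(amount) FROM ledger").fetchone()


# SQLite's own structural check returns a single 'ok' row when no problems are found.
assert replica.execute("PRAGMA integrity_check").fetchone()[0] == "ok"
assert table_digest(source) == table_digest(replica), "backup does not match source"
print(table_digest(replica))  # (3, 14.75)
```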

Examples of Data Integrity Issues

High data integrity results from establishing proper data management and governance practices. As in many other domains, people, processes, and technology each play a role. Improving all three strengthens data integrity, so that the insights drawn from that data can propel business decisions.

The following are seven common issues that undermine data integrity.

- Poor or Missing Data Integration Tools — Data integration tools are available for businesses of any size. Analyzing multiple data sources without them quickly becomes cumbersome and error-prone. Adopting standard data integration tools also eliminates many of the data integrity issues discussed below.

- Manual Data Entry and Data Collection Processes — Manual processes introduce human error. Reduce errors by adding data validation, standardization, and other data checks. Where possible, automated (machine) data entry is much more reliable.

- Multiple Tools to Process and Analyze Data — Tools come and go as new application technologies emerge, so it is common to accumulate many tools to support data operations. Audit these tools periodically for their usefulness, their conflicts with other tools, and the potential to consolidate them into newer, more capable data platforms.

- Poor Audit Records — Data adjustments and changes to data integrity rules must all be documented. Failing to keep a historical record of data changes leaves companies in the dark about their progress and harms both operations and data analysis. Without that record, changes to data are made blind.

- Legacy Systems — Just as software outgrows hardware, data outgrows software systems and the infrastructure they run on. Legacy systems eventually fall behind, so it is wise to upgrade data infrastructure proactively, before switching becomes costly.

- Inadequate Data Training — Data is complex, and every stakeholder should have the training needed to handle it properly. Adequate training ensures that all users understand the upstream and downstream needs of other data stakeholders, as well as their own responsibilities for supporting data as a critical, strategic asset.

- Inadequate Data Security and Maintenance — Gaps in security and maintenance introduce process flaws that can corrupt data. Employees sharing a single user ID can accidentally introduce data errors, and loosely guarded credentials can let intruders steal or delete data.
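Several of these issues, such as juggling multiple tools and relying on manual entry, tend to surface as records that disagree between systems. A common safeguard is periodic reconciliation. The sketch below compares two hypothetical extracts keyed by `id`, hashing each record so that missing, unexpected, and changed records can be reported. The field names and the choice of SHA-256 are illustrative assumptions.

```python
import hashlib


def row_hashes(rows, key="id"):
    """Map each record's key to a hash of its fields in a fixed (sorted) order."""
    result = {}
    for row in rows:
        canonical = "|".join(f"{k}={row[k]}" for k in sorted(row))
        result[row[key]] = hashlib.sha256(canonical.encode()).hexdigest()
    return result


def reconcile(source_rows, target_rows, key="id"):
    """Report keys missing from the target, unexpected in it, or with differing contents."""
    src, tgt = row_hashes(source_rows, key), row_hashes(target_rows, key)
    return {
        "missing": sorted(src.keys() - tgt.keys()),
        "unexpected": sorted(tgt.keys() - src.keys()),
        "mismatched": sorted(k for k in src.keys() & tgt.keys() if src[k] != tgt[k]),
    }


crm_rows = [{"id": 1, "email": "ana@example.com"}, {"id": 2, "email": "bo@example.com"}]
warehouse_rows = [{"id": 1, "email": "ana@old-domain.com"}, {"id": 3, "email": "cy@example.com"}]
print(reconcile(crm_rows, warehouse_rows))
# {'missing': [2], 'unexpected': [3], 'mismatched': [1]}
```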

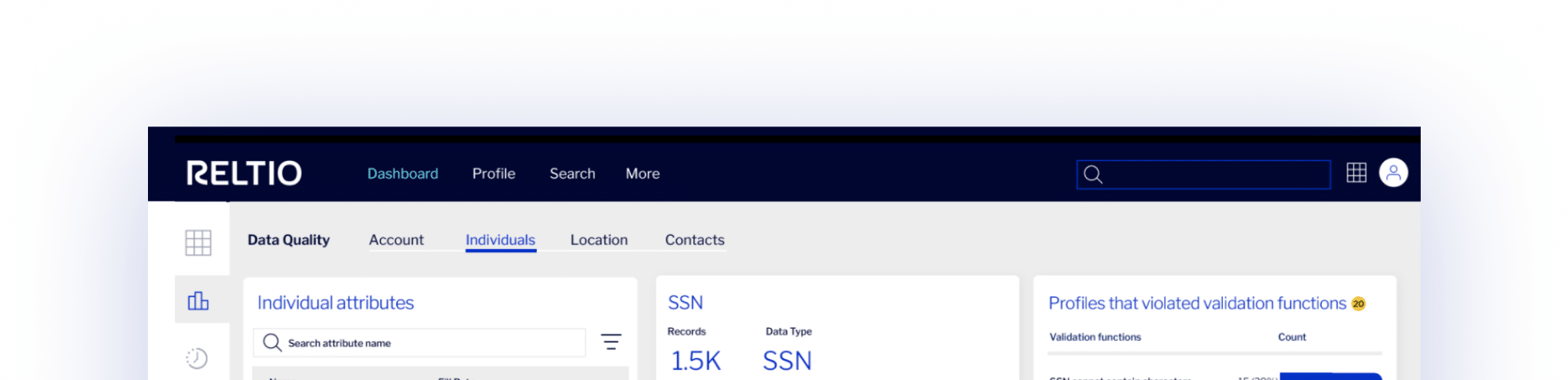

Some or all of the issues above can be addressed with data quality tools or Master Data Management (MDM) platforms.
