What is Data Lineage?

Data lineage is a critical aspect of modern data management, as it provides a comprehensive view of the origin, movement, and transformation of data as it flows through various systems and processes. With the growing complexity of data environments, it has become increasingly important to maintain accurate and reliable data lineage information to ensure compliance, facilitate troubleshooting, and support informed decision-making.

Understanding Data Lineage

Data lineage is the process of tracing the flow of data through an organization, from its original source to its final destination. It involves identifying the origin of the data, understanding how it was transformed or manipulated along the way, and tracking its use throughout its lifecycle.

Data lineage provides important information about the quality, reliability, and trustworthiness of data. By understanding the lineage of a particular data set, organizations can determine its accuracy, identify potential issues or errors, and trace any changes or modifications that have been made to it over time. This can be particularly important for regulatory compliance, data governance, and risk management purposes.

Data lineage can be represented in a variety of ways, including diagrams, charts, and other visualizations that show the flow of data through different systems and processes. There are also a number of tools and technologies available that can automate the process of capturing and analyzing data lineage, making it easier for organizations to manage and maintain their data assets.

Data Lineage vs. Data Classification

Data lineage and data classification are two different concepts that are both important for effective data management.

Data lineage, as described above, tracks the flow of data through an organization from its original source to its final destination, including how the data was transformed along the way and how it is used throughout its lifecycle. It speaks to the quality, reliability, and trustworthiness of data.

Data classification, on the other hand, refers to the process of categorizing data based on its level of sensitivity or criticality to the organization. This involves identifying different types of data, such as personally identifiable information (PII), financial data, and confidential business information, and assigning appropriate security controls and protection measures based on their classification.

The two concepts are complementary. Data lineage helps ensure the accuracy and reliability of data, while data classification helps ensure that data is protected appropriately based on its sensitivity and criticality. Together, they can help organizations effectively manage their data assets and meet their regulatory and compliance requirements.

Data Lineage vs. Data Provenance

Data lineage and data provenance are related concepts that both deal with the history and origins of data, but they differ in scope, focus, and application.

Data lineage refers to the end-to-end tracing of data as it moves through an organization’s systems, from its point of origin to its final use. This includes understanding where the data came from, how it has been ingested, transformed, enriched, or aggregated, and where it ultimately resides, whether in a database, dashboard, machine learning model, or report. Data lineage diagrams or tools typically illustrate the entire lifecycle of data, mapping out each transformation step, system interaction, and dependency along the way. This comprehensive visibility is crucial for impact analysis, troubleshooting data quality issues, ensuring regulatory compliance, and supporting data auditing and governance.

Data provenance, while sometimes used interchangeably with data lineage, focuses more narrowly on the origins and original context of a data asset. It captures information about where the data was sourced, who generated or authored it, when it was created, and under what conditions or methods it was collected. Provenance provides critical context that allows users to evaluate the trustworthiness, accuracy, and legitimacy of data, which is especially important in scientific research, legal documentation, and any setting where the credibility of data must be validated.

In essence, data provenance is concerned with where data comes from, while data lineage maps out where it goes and what happens to it. Both are key components of a robust data governance strategy and are increasingly supported by specialized metadata management and data cataloging tools that automate the tracking and documentation process.

Data Lineage vs. Data Governance

Data lineage and data governance are two fundamental concepts in the field of data management, each serving a distinct but complementary purpose in ensuring that data is accurate, trustworthy, and responsibly handled throughout its lifecycle.

Data lineage refers specifically to the process of tracing and documenting the flow of data as it moves through an organization’s systems: from its original source, through various transformations and processing stages, to its final destination or use. This includes capturing details such as which applications or systems accessed the data, what transformations or calculations were applied, and where the data is stored or consumed. Lineage provides a detailed, step-by-step map of how data elements are connected across databases, ETL pipelines, analytics tools, and reporting platforms. The primary goal of data lineage is to provide transparency, enable traceability, and support root cause analysis in the event of data quality issues. It is also crucial for understanding the downstream impact of changes to data sources or processes and for ensuring the integrity of data used in decision-making, analytics, and compliance reporting.

Data governance, in contrast, is a broader organizational framework focused on establishing and enforcing the policies, standards, roles, responsibilities, and processes needed to manage data as a strategic asset. It encompasses a wide range of data-related activities, including setting data quality standards, managing metadata, assigning data ownership, ensuring data privacy and security, defining access controls, and maintaining compliance with industry regulations such as GDPR, HIPAA, or CCPA. Effective data governance also involves building a culture of accountability and stewardship, where individuals and teams are empowered and obligated to manage data properly according to predefined rules and ethical guidelines.

While data lineage is often considered a technical function and data governance a strategic one, they are tightly interrelated. Data lineage provides the visibility and auditability that data governance requires to function effectively. Together, they form the backbone of a strong data management strategy that promotes trust, accountability, and business value from data assets.

Coarse-Grained vs. Fine-Grained Data Lineage

Coarse-grained and fine-grained data lineage represent two distinct levels of detail in tracking how data moves and transforms within an organization’s data ecosystem. Both are valuable in different contexts, depending on the goals of data governance, auditing, and operational insight.

Coarse-grained data lineage offers a high-level, summary view of data movement. It typically tracks the flow of data between major systems, platforms, or organizational units. For example, it might show that data is extracted from a CRM system, passed through a data pipeline, loaded into a data warehouse, and then accessed by a BI tool for reporting. This level of lineage illustrates the source-to-destination flow of entire datasets or tables, without diving into the internal transformations or the specific data fields involved. Coarse-grained lineage is often used for compliance documentation, system-level impact assessments, and architectural overviews, as it helps organizations understand the broader movement of data across systems, applications, and business domains.

Fine-grained data lineage, on the other hand, delivers a high-resolution view of data flow by tracking transformations at the level of individual data elements or columns. It not only captures where each data field originates, but also shows how it is calculated, aggregated, filtered, or modified throughout its lifecycle, down to specific transformation logic, formulas, or code-level functions. Fine-grained lineage is essential for data quality validation, root cause analysis, debugging, and understanding dependencies, especially when dealing with regulated data such as personally identifiable information or financial metrics. It provides the traceability needed to explain exactly how a data point was derived, which is crucial for audits, compliance, and accurate reporting.

In practice, organizations often leverage both types of lineage together. Coarse-grained lineage helps teams quickly map overall data flows, while fine-grained lineage supports deeper analysis and operational troubleshooting. Together, they enable a more comprehensive and scalable approach to data observability, governance, and trust.
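To make the difference in granularity concrete, here is a minimal Python sketch. The table and column names are hypothetical, and the `ColumnEdge` structure is an assumption made for illustration, not the format of any particular lineage tool. It stores fine-grained (column-level) edges and derives the coarse-grained (table-level) view by collapsing them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColumnEdge:
    """Fine-grained edge: one source column feeding one target column."""
    source: str     # fully qualified "schema.table.column"
    target: str     # fully qualified "schema.table.column"
    transform: str  # short description of the logic applied


# Hypothetical column-level lineage for a small CRM-to-warehouse pipeline.
FINE_GRAINED = [
    ColumnEdge("crm.accounts.id", "warehouse.customers.customer_id", "copy"),
    ColumnEdge("crm.accounts.first_name", "warehouse.customers.full_name", "concat"),
    ColumnEdge("crm.accounts.last_name", "warehouse.customers.full_name", "concat"),
    ColumnEdge("crm.orders.amount", "warehouse.customers.lifetime_value", "sum"),
]


def table_of(column: str) -> str:
    """Drop the trailing column name: 'crm.accounts.id' -> 'crm.accounts'."""
    return column.rsplit(".", 1)[0]


def to_coarse_grained(edges):
    """Collapse column-level edges into distinct table-level edges."""
    return sorted({(table_of(e.source), table_of(e.target)) for e in edges})


coarse = to_coarse_grained(FINE_GRAINED)
for source_table, target_table in coarse:
    print(f"{source_table} -> {target_table}")
```

The coarse view can always be derived from the fine-grained one, but not the other way around, which is one reason column-level capture is more expensive to collect and maintain.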

How Data Lineage Works

Understanding how data moves through an organization’s systems requires visibility into the context that surrounds it, and that’s where metadata plays a crucial role. Often described as “data about data,” metadata provides essential details about a data asset, such as its type, structure, format, creator, creation and modification dates, and file size. Within data lineage tools, metadata offers the underlying intelligence that allows users to trace how data is sourced, transformed, and used across the pipeline. This transparency helps teams assess data relevance, trustworthiness, and usability.

As the landscape of data storage and usage has been reshaped by the growth of big data, organizations have increasingly turned to data science to inform strategy and decision-making. But meaningful insights aren’t possible without a clear view of where data comes from and how it changes over time. To support this, organizations rely on data lineage tools in combination with data catalogs. While lineage tools map the historical flow and transformation of data using metadata, data catalogs organize this metadata into a centralized, searchable index of enterprise data assets. Together, they provide both the technical visibility and the user-friendly interface needed for effective data discovery, governance, and reuse.
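As a rough illustration of how a catalog organizes metadata into a searchable index, the sketch below keeps a few metadata records in memory and searches them by keyword. The `CatalogEntry` fields, asset names, and owners are all hypothetical. A real catalog would persist this metadata and expose far richer search.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    """Metadata describing one data asset (illustrative fields only)."""
    name: str
    owner: str
    asset_type: str
    description: str
    upstream: list = field(default_factory=list)  # lineage: direct sources


# Hypothetical catalog contents.
CATALOG = [
    CatalogEntry("crm.accounts", "sales-ops", "table",
                 "Raw customer accounts exported from the CRM"),
    CatalogEntry("warehouse.customers", "data-eng", "table",
                 "Cleaned customer dimension", upstream=["crm.accounts"]),
    CatalogEntry("dashboard.churn", "analytics", "report",
                 "Monthly churn dashboard", upstream=["warehouse.customers"]),
]


def search(catalog, keyword):
    """Return names of assets whose name or description mentions the keyword."""
    keyword = keyword.lower()
    return [
        entry.name
        for entry in catalog
        if keyword in entry.name.lower() or keyword in entry.description.lower()
    ]


matches = search(CATALOG, "customer")
print(matches)
```

Here the `upstream` field carries the lineage information, while `search` plays the role of the catalog's discovery interface.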

Types of Data Lineage

Data lineage can be traced in several directions, each offering a different perspective on how data moves and transforms throughout its lifecycle. These approaches help organizations understand where data originates, how it changes, and where it ultimately ends up.

- Backward data lineage: Backward data lineage refers to tracing data in reverse, starting from a final report, dashboard, data product, or application output and working backwards through the systems and transformations to uncover its original sources. This technique is particularly useful for auditing, troubleshooting data discrepancies, and validating data accuracy, because it allows data stewards or analysts to verify the integrity of a final dataset by understanding exactly how it was produced.

- Forward data lineage: Forward data lineage, in contrast, starts at the data source and follows the path that data takes through various processes, systems, and transformations until it reaches its final destination or use case. This approach is commonly used for impact analysis and change management. If a data engineer needs to modify a source table or field, forward lineage helps determine which downstream systems, dashboards, or machine learning models will be affected by the change. It allows teams to anticipate potential disruptions and make informed decisions about system updates or schema modifications.

- End-to-end data lineage: End-to-end data lineage is the most comprehensive approach, providing a full-spectrum view of data movement from its point of origin all the way to its final use. It combines both forward and backward lineage to offer a 360-degree perspective of how data flows across an entire ecosystem. This holistic view is essential for maintaining compliance with regulations, ensuring data quality at scale, enabling enterprise-wide data governance, and supporting advanced analytics or AI initiatives. End-to-end lineage maps out each step in the data pipeline, from ingestion and transformation through storage, integration, and consumption, making it easier to manage dependencies and ensure accountability throughout the data lifecycle.
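If lineage is modeled as a directed graph, forward and backward lineage amount to the same traversal run in opposite directions. The following Python sketch assumes a small, hypothetical set of assets and edges. It shows one simple way to do this, not how any specific lineage product works.

```python
from collections import defaultdict, deque

# Hypothetical lineage edges: (upstream asset, downstream asset).
EDGES = [
    ("crm.accounts", "staging.accounts"),
    ("erp.invoices", "staging.invoices"),
    ("staging.accounts", "warehouse.customers"),
    ("staging.invoices", "warehouse.revenue"),
    ("warehouse.customers", "dashboard.churn"),
    ("warehouse.customers", "model.ltv"),
    ("warehouse.revenue", "model.ltv"),
]


def build_index(edges):
    """Index the edges in both directions for fast neighbor lookup."""
    downstream, upstream = defaultdict(set), defaultdict(set)
    for src, dst in edges:
        downstream[src].add(dst)
        upstream[dst].add(src)
    return downstream, upstream


def reachable(start, neighbors):
    """Breadth-first search: every asset reachable from `start`."""
    seen, queue = set(), deque([start])
    while queue:
        node = queue.popleft()
        for nxt in neighbors.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


DOWNSTREAM, UPSTREAM = build_index(EDGES)


def forward_lineage(asset):
    """Impact analysis: everything that depends on `asset`."""
    return reachable(asset, DOWNSTREAM)


def backward_lineage(asset):
    """Root cause analysis: everything `asset` was derived from."""
    return reachable(asset, UPSTREAM)


print(sorted(forward_lineage("crm.accounts")))
print(sorted(backward_lineage("dashboard.churn")))
```

In this model, end-to-end lineage is simply the full graph. A change to `crm.accounts` would be checked with `forward_lineage`, while a suspicious number in `dashboard.churn` would be investigated with `backward_lineage`.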

The Importance of Data Lineage

Data lineage is important for several reasons, including:

- Improved data quality: By tracking the flow of data throughout an organization, data lineage can help identify potential issues or errors in the data, allowing organizations to take steps to improve data quality.

- Regulatory compliance: Many industries are subject to regulations around data privacy and security. Data lineage can help organizations demonstrate compliance with these regulations by providing a detailed record of how data is collected, processed, and shared.

- Data governance: Data lineage is an important part of overall data governance, providing a comprehensive view of how data is used and managed within an organization.

- Data analysis: Fine-grained data lineage can provide important information for data analysis, helping to identify patterns and relationships in the data and supporting data-driven decision making.

- Troubleshooting: When issues arise with data quality or reliability, data lineage can help identify the source of the problem, making it easier to troubleshoot and resolve issues.

Data lineage is an important tool for effective data management, allowing organizations to track the flow of data throughout their systems and ensure that data is accurate, reliable, and trustworthy.

Data Lineage Tools and Techniques

A data lineage tool should have the following capabilities:

- Data ingestion: The tool should be able to ingest data from a wide variety of sources, including databases, data warehouses, and data lakes.

- Automated lineage tracing: The tool should be able to automatically trace the lineage of data as it moves through an organization’s systems, without the need for manual intervention.

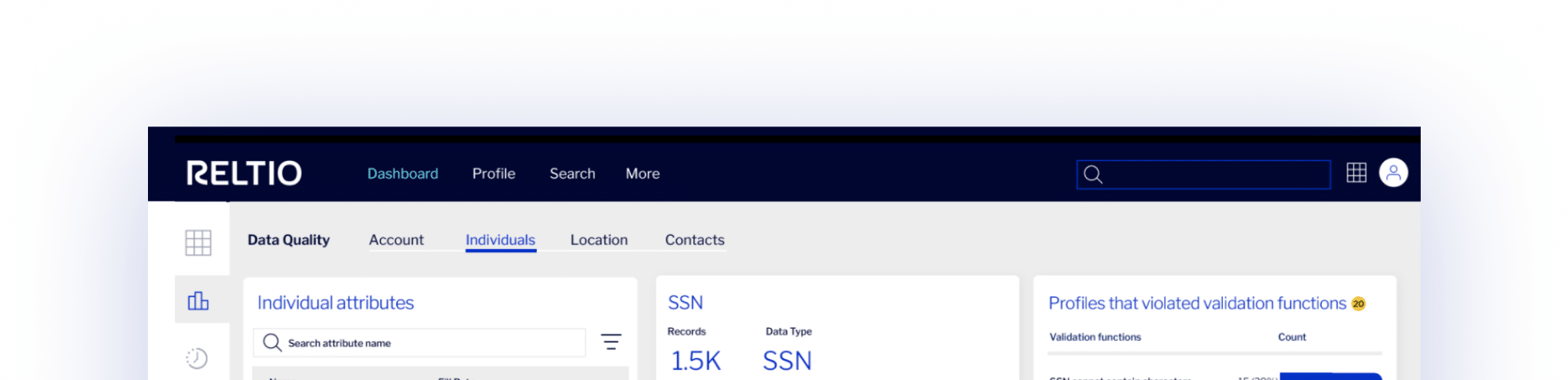

- Visualization: The tool should provide a graphical representation of the data lineage, making it easy for users to understand the flow of data and identify potential issues.

- Metadata management: The tool should be able to capture and manage metadata associated with the data, including information about data quality, security, and compliance.

- Impact analysis: The tool should be able to perform impact analysis, allowing users to understand the potential impact of changes to data sources or processing systems on downstream data consumers.

- Collaboration: The tool should support collaboration among data users and stakeholders, allowing them to share insights and collaborate on data-related tasks.

- Integration: The tool should be able to integrate with other data management tools and systems, including data quality tools, data cataloging tools, and data integration tools.

A good data lineage tool should provide a comprehensive view of an organization’s data flow, supporting effective data governance, data analysis, and troubleshooting.

Data Tagging

Data tagging is the process of attaching descriptive labels or tags to data to help categorize and organize it. Tags can be used to describe different attributes of the data, such as its type, source, format, or content, as well as its purpose, audience, or usage.

Data tagging is a key part of metadata management, as it provides a way to capture and manage metadata associated with the data. By tagging data with descriptive labels, organizations can improve the discoverability and usability of their data, making it easier for users to find and understand the data they need.

Some common types of data tags include:

- Type tags: Used to categorize data based on its format or structure, such as “CSV”, “JSON”, or “XML”.

- Source tags: Used to identify the source of the data, such as the name of the database or the system that generated the data.

- Content tags: Used to describe the content of the data, such as keywords, topics, or themes.

- Usage tags: Used to indicate the intended use or audience for the data, such as “internal use only” or “customer-facing”.

Data tagging can be done manually, by users adding tags to the data themselves, or automatically, using tools or algorithms to extract tags from the data or from other sources of metadata.
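As a minimal sketch of the automatic approach, the function below derives type, source, and usage tags from a file path and a list of column names. The `key:value` tag format, the `SENSITIVE_HINTS` list, and the convention that the first path segment names the source system are all assumptions made for this example.

```python
from pathlib import PurePosixPath

# Hypothetical substrings that suggest a column holds sensitive data.
SENSITIVE_HINTS = ("email", "ssn", "phone")


def auto_tags(path, columns):
    """Derive simple tags from a file path and its column names."""
    tags = set()
    parsed = PurePosixPath(path)

    # Type tag: taken from the file extension, e.g. ".csv" -> "type:CSV".
    suffix = parsed.suffix.lstrip(".").upper()
    if suffix:
        tags.add(f"type:{suffix}")

    # Source tag: assumes the first path segment names the source system.
    if len(parsed.parts) > 1:
        tags.add(f"source:{parsed.parts[0]}")

    # Usage tag: restrict data whose column names look sensitive.
    if any(hint in col.lower() for col in columns for hint in SENSITIVE_HINTS):
        tags.add("usage:internal use only")

    return tags


tags = auto_tags("crm/exports/accounts.csv", ["id", "email", "plan"])
print(sorted(tags))
```

Rule-based tagging like this is easy to audit but only as good as its naming conventions, so it is often combined with manual review.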

Pattern-Based Lineage

Pattern-based lineage is a type of data lineage that uses patterns to infer the lineage of data. Instead of relying solely on metadata or tracking data flow explicitly, pattern-based lineage uses patterns in the data processing to identify how data is transformed and moved through different systems.

Pattern-based lineage is particularly useful in complex data architectures, where data may be processed through multiple systems and technologies in a non-linear way. By identifying patterns in the data processing, pattern-based lineage can provide a more comprehensive view of the data flow than traditional metadata-based lineage.

Some common patterns used in pattern-based lineage include:

- Data transformation patterns: Patterns that describe how data is transformed, such as filtering, aggregating, or joining data.

- Data movement patterns: Patterns that describe how data is moved between systems or applications, such as loading data into a database or streaming data between services.

- Data quality patterns: Patterns that describe how data quality is managed and enforced, such as validating data or handling missing or incomplete data.

Pattern-based lineage can be implemented using a variety of techniques, such as machine learning algorithms, data mining, or rule-based systems. The goal is to identify patterns in the data processing that can be used to infer the lineage of data and provide a more complete picture of the data flow.
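A very simple rule-based variant of this idea can be sketched in Python: without reading any pipeline code, it guesses which source column fed each target column by measuring how many of the target's distinct values also appear in the source. The sample data, the overlap score, and the 0.9 threshold are assumptions chosen for illustration. Production tools use considerably more sophisticated matching.

```python
def value_overlap(source_values, target_values):
    """Fraction of distinct target values that also appear in the source."""
    target = set(target_values)
    if not target:
        return 0.0
    return len(target & set(source_values)) / len(target)


def infer_column_lineage(source, target, threshold=0.9):
    """Guess (source column, target column, score) links from value overlap.

    `source` and `target` map column names to lists of sample values.
    """
    links = []
    for t_name, t_values in target.items():
        for s_name, s_values in source.items():
            score = value_overlap(s_values, t_values)
            if score >= threshold:
                links.append((s_name, t_name, score))
    return links


# Hypothetical samples: the target table renamed and filtered the source.
source_table = {
    "id": [1, 2, 3, 4],
    "email": ["a@x.io", "b@x.io", "c@x.io", "d@x.io"],
}
target_table = {
    "customer_id": [1, 2, 3],
    "contact": ["a@x.io", "b@x.io", "c@x.io"],
}

links = infer_column_lineage(source_table, target_table)
for src, dst, score in links:
    print(f"{src} -> {dst} (overlap {score:.2f})")
```

Because the result is inferred rather than observed, it can produce false matches (for example, two unrelated columns that both hold small integers), so inferred links are best treated as candidates to confirm.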

Parsing-Based Lineage

Parsing-based lineage is a type of data lineage that involves parsing data flows and processes to identify how data is transformed and moved through different systems. In other words, parsing-based lineage uses automated parsing techniques to extract information from data and process flows in order to identify the source, processing steps, and destinations of data.

Parsing-based lineage involves parsing or scanning different types of sources, such as SQL queries, ETL workflows, logs, and code, to extract information about the data lineage. This information is then used to generate a visual representation of the data flow, which can help organizations understand how data is being used and processed across different systems.

Parsing-based lineage can be useful for organizations that need to manage complex data architectures or work with large volumes of data. By automating the parsing process, parsing-based lineage can help organizations quickly identify data lineage, pinpoint data quality issues, and troubleshoot problems in the data flow.

However, parsing-based lineage does have some limitations. Because it relies on automated parsing techniques, it may not always capture all aspects of the data flow, and may require manual intervention or human interpretation to accurately represent the data lineage. Additionally, it may not be able to capture certain types of data transformations or processing steps that are not easily parsed from the data source.
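The sketch below illustrates both the idea and its limits. It uses two regular expressions to pull source and target tables out of a simple SQL statement. The table names and the statement are hypothetical, and regular expressions stand in here for a real SQL parser: this version would misread common table expressions, subqueries in some positions, `CREATE TABLE IF NOT EXISTS`, and dynamically built SQL.

```python
import re

# Tables written to: the name after INSERT INTO or CREATE TABLE.
TARGET_RE = re.compile(r"\b(?:insert\s+into|create\s+table)\s+([\w.]+)", re.IGNORECASE)
# Tables read from: the name after FROM or JOIN.
SOURCE_RE = re.compile(r"\b(?:from|join)\s+([\w.]+)", re.IGNORECASE)


def parse_lineage(sql):
    """Return (source table, target table) edges found in one SQL statement."""
    targets = TARGET_RE.findall(sql)
    # dict.fromkeys removes duplicate sources while preserving order.
    sources = list(dict.fromkeys(SOURCE_RE.findall(sql)))
    return [(src, tgt) for tgt in targets for src in sources]


# Hypothetical ETL statement.
SQL = """
INSERT INTO warehouse.customers
SELECT a.id, SUM(o.amount) AS lifetime_value
FROM crm.accounts AS a
JOIN crm.orders AS o ON o.account_id = a.id
GROUP BY a.id
"""

edges = parse_lineage(SQL)
for src, tgt in edges:
    print(f"{src} -> {tgt}")
```

A statement with no recognizable write target yields no edges at all, which is the kind of gap that may call for manual intervention.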
